1space Multi-Cloud¶

1space Overview¶

1space creates a single object namespace between object API endpoints. This enables applications to access data transparently between public cloud and on-premises resources.

1space enables you to manage your data whether it is in your SwiftStack cluster on-premises or in the public cloud. With flexible data management policies, 1space allows you to position your data where you can best take advantage of it.

For example, 1space can provide a lifecycle profile that automatically moves older data to the cloud to make room for new data on-premises. Alternatively, data can be mirrored to a public cloud to take advantage of its compute services. 1space can also be used for live migration of data from a public cloud or another private cloud storage location to a SwiftStack cluster.

Because of the single object namespace, data is accessible regardless of where the data is stored. The 1space Cloud Connector can be deployed in the public cloud to provide access to the single object namespace.

1space can be configured to support many needs and workflows. Please read through the feature documentation below and review the example use cases. For any questions or configuration assistance, please contact SwiftStack Support.

Deployment Options¶

1space is available with the following deployment configurations.

- Lifecycle – Move data to cloud storage

- Sync / Mirror – Copy data to cloud storage

1space Lifecycle and Mirror are software components that run on an on-premises SwiftStack cluster. From the cluster's point of view, Lifecycle and Mirror push data to a remote storage location.

Configuration Options¶

1space offers the following configuration options.

| Configuration | Lifecycle | Sync/Mirror | Cloud Connector | Migrations | Description |
|---|---|---|---|---|---|
| Single Namespace | Yes | Yes | Yes | Yes | Merge a SwiftStack Cluster bucket/container with a cloud bucket/container. |
| Restore on GET | Yes | No | No | Yes | Store a local copy of the data upon access when it is read from the “remote” location. For migrations, a GET request “jumps the queue” of the planned migration. For Lifecycle, the configured lifecycle policy restarts. |
| Propagate DELETE option | Yes | Yes | No | Yes | Forward delete requests to the remote storage location. |
| Do not propagate DELETE option | Yes | Yes | No | No | Do not forward delete requests to the remote storage location. |
| Lifecycle Trigger: Immediate | Yes | No | No | No | Move data after specified time interval (in days). |
| SwiftStack Namespace Prefix | Yes | Yes | Yes | No | Use the recommended naming prefix in the remote storage location to reduce the odds of a namespace conflict in the remote destination. |
| Custom Namespace Prefix | Yes | Yes | Yes | Yes | Enable migration of preexisting data, or lifecycle data to a preexisting storage location. |
| Metadata-based Triggers | Yes | Yes | No | No | Trigger Lifecycle or Sync/Mirror policies based on object metadata. |
| Convert DLO to SLO | Yes | Yes | No | No | Allow Dynamic Large Objects (DLO) copied to the remote Swift endpoint to be stored as Static Large Objects (SLO). |
| Exclude matching objects | Yes | Yes | No | No | Skip objects that match a given name when copying them. Useful if large object segments are stored in the same container, for example. |

Cloud Storage Targets¶

1space works with Amazon S3, Google Cloud Storage, and SwiftStack storage clusters. It may also work with other S3- or Swift-compatible systems. See the table below, and please contact support if you have any questions regarding your use case.

1space supports the following targets.

| Cloud Storage | Lifecycle | Sync/Mirror |
|---|---|---|
| AWS S3 | Yes | Yes |
| Google Cloud Storage | Yes | Yes |
| Microsoft Azure Blob | Yes | Yes |
| SwiftStack Cluster | Yes | Yes |
| Other qualified AWS S3 API endpoints | No | No |
| Other qualified OpenStack Swift API endpoints | No | No |

Configuring 1space¶

Multiple Swift containers can be mapped to a single AWS S3 or Google Cloud Storage bucket. Each 1space mapping consists of the Swift account (e.g. AUTH_test), container, the cloud container (bucket), and a set of cloud credentials.

For Microsoft Azure Blob and Swift, each SwiftStack container is mapped to a single container in the remote blob store.

The processes doing the replication reside on the Swift container nodes. These nodes need network access to the cloud API endpoint in order to replicate the data.

Warning

Beware of using the same bucket for multiple SwiftStack clusters. In that case, if the same account and container exist on two clusters, one cluster may overwrite objects from the other in the bucket.

All of the 1space administration is performed through the 1space tab, on the left side in the Cluster management view. The subsequent sections assume that you have already navigated to that section.

1space Cloud Credentials¶

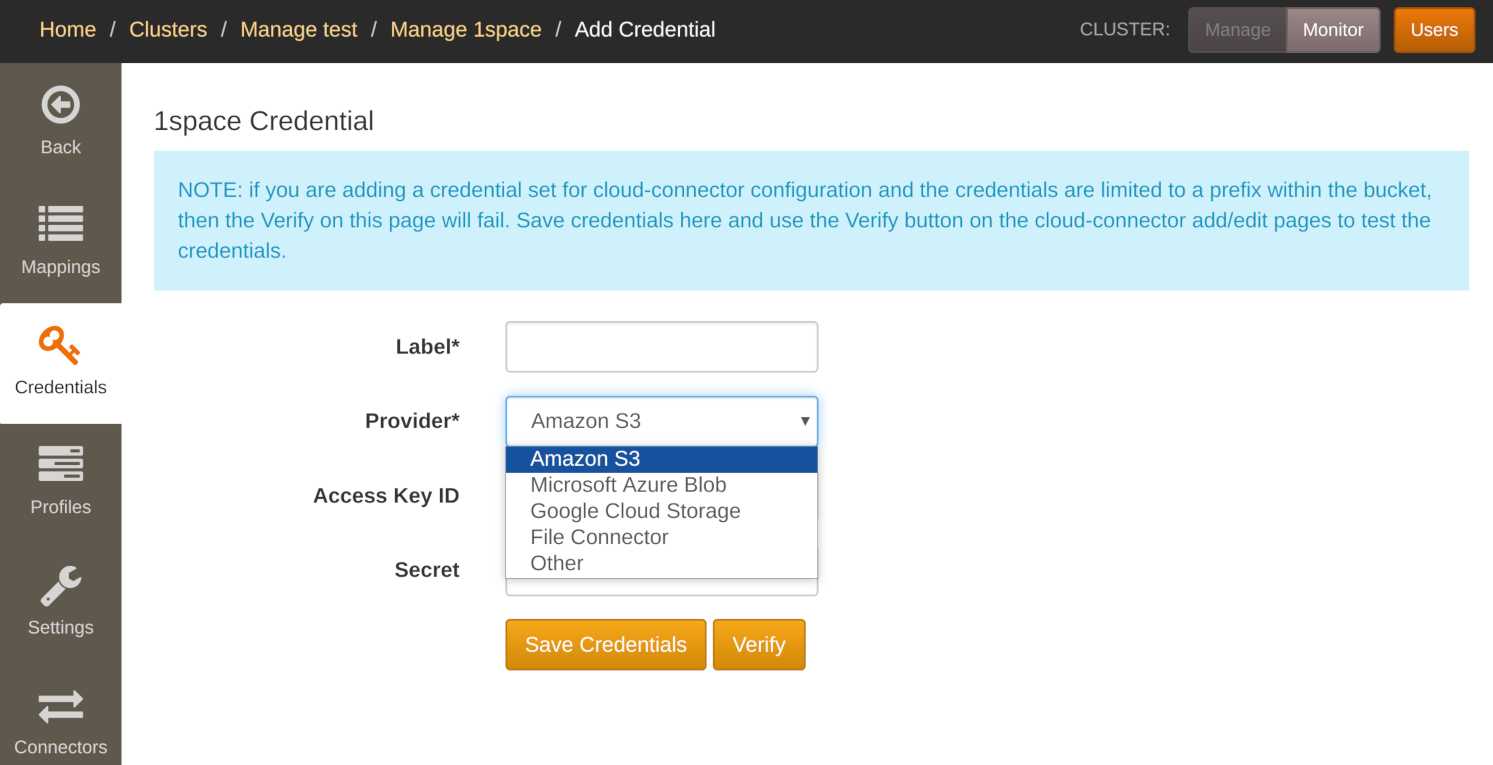

Before adding a mapping, a set of credentials must be created. To set up credentials, navigate to the 1space tab in the manage cluster interface. Follow these steps to configure specific providers:

- Click on Credentials

- Add credentials by specifying a friendly Label, Access Key ID, and Secret Access Key

- Select Amazon S3, Google Cloud Storage, Azure, File Connector, or Other (for Swift or a non-AWS S3 endpoint), depending on which provider you'd like to use.

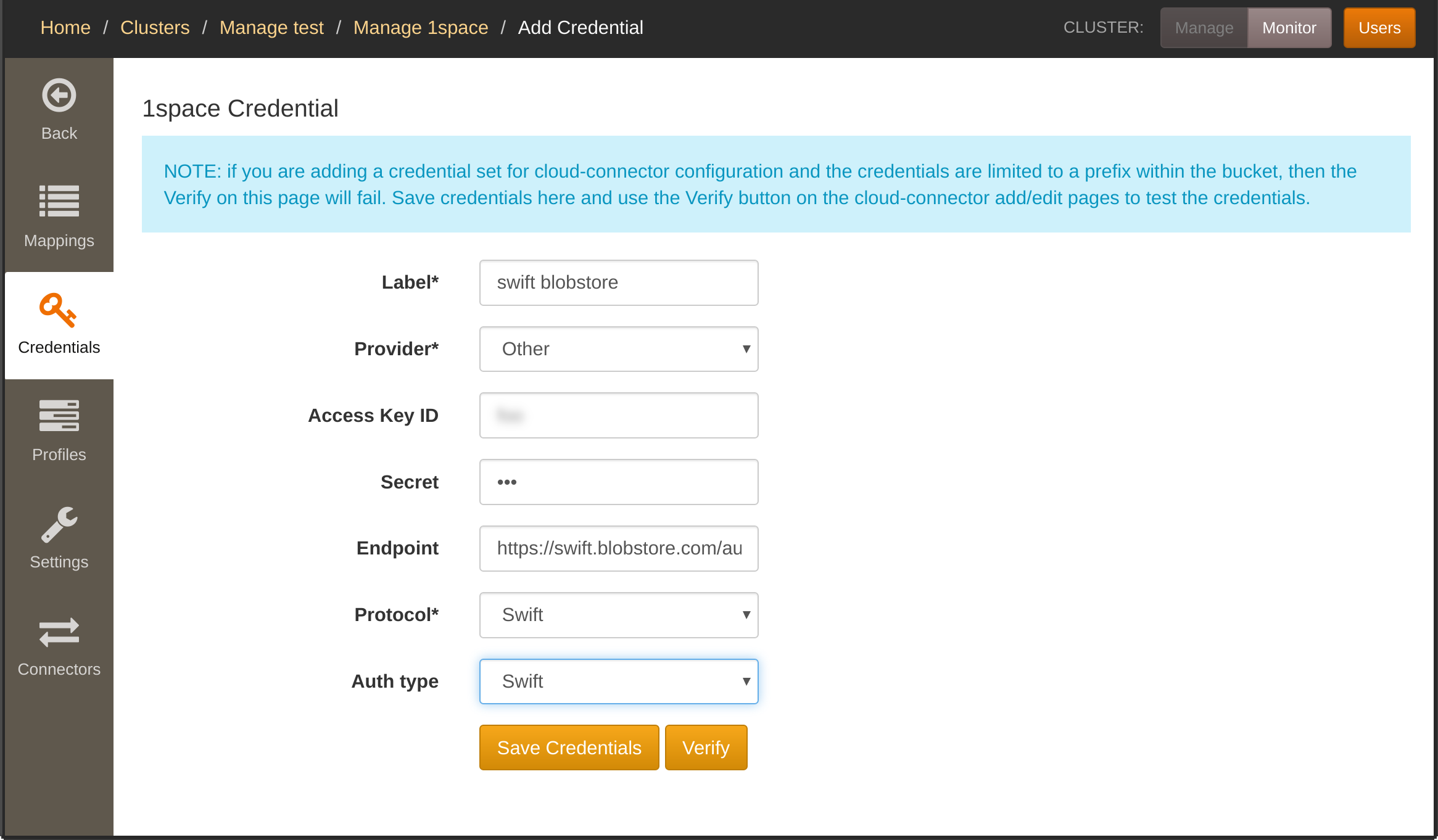

For File Connector, Swift, and a non-AWS S3 object store, you also must enter the authentication endpoint.

For Swift, you must also select the authentication type. 1space supports Swift v1 authentication, as well as Keystone v2 and v3. Each Swift authentication type has its own specific credential fields.

Afterward, you can edit the credentials (for example, if the secret keys are rotated). Each set of credentials for a given cluster must have a unique identifier, i.e. you cannot add multiple credentials with the same Label.

After editing the credentials, you must deploy the configuration for the changes to go into effect.

You can validate your credentials before submitting them. The validation takes place on a node in the cluster. You will not be able to validate your credentials if there are no nodes in the cluster. This validation step only attempts to list buckets/containers to ensure that the credentials are recognized by the service provider.

Note

Google Cloud Storage Interoperability mode must be enabled. See Configuring S3 Interoperability in Google Cloud Storage.

Note

When editing credentials, the secret access key will not be displayed. Leaving the field blank will keep the current secret. Supplying a new secret will overwrite the existing value.

Setting up 1space Profiles¶

All 1space profiles are listed on the Profiles tab.

Currently, 1space allows for two kinds of profiles for data under its management: Sync and Lifecycle.

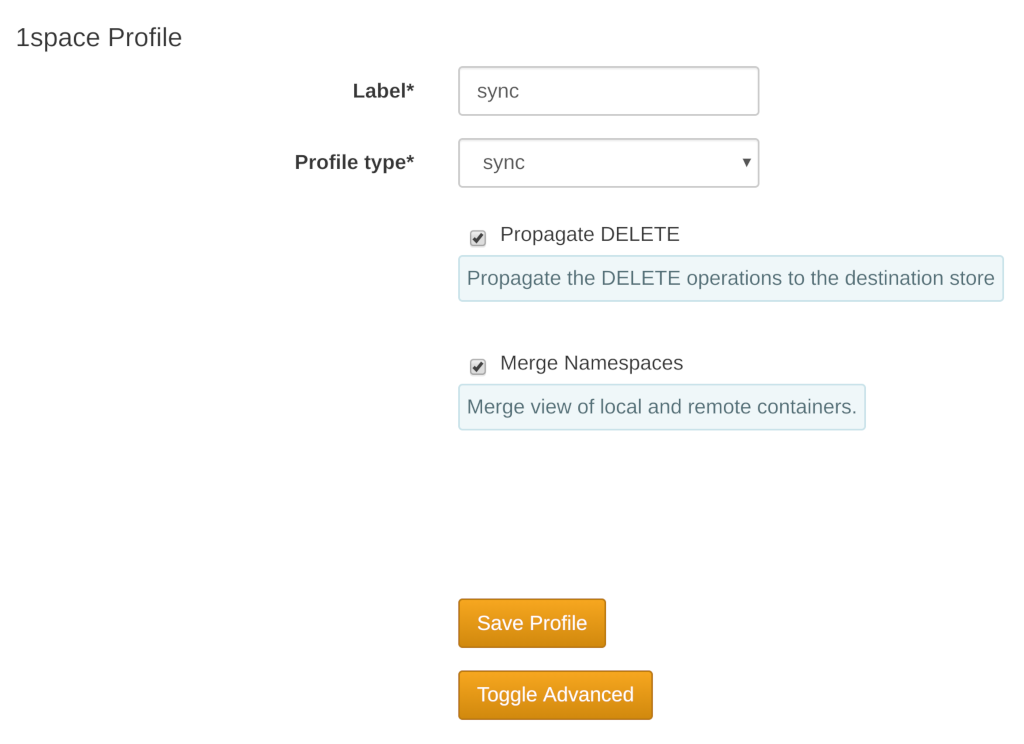

Sync Profile¶

The Sync profile (named sync) copies data into the remote container (S3/Google Cloud Storage bucket, Azure container, or Swift container) as soon as possible. Any objects removed from the source Swift container will also be removed from the remote store (if present).

Lifecycle Profile¶

The Lifecycle profile copies objects to the remote store after a specified delay. Once an object has been copied, it is removed from the Swift container. A DELETE request issued against the Swift container will not remove objects in the remote store once they have been copied. In addition, the Lifecycle profile offers an option to retrieve objects on access. Since the objects are still visible through the Swift API, 1space can place them back into the cluster when a GET request is issued (this is done in-line with serving the request). A restored object is subject to the same expiration policy (i.e. if the Lifecycle policy expires objects after 1 week, the object will expire 1 week after restoration).

Advanced profile settings¶

1space profiles have a number of options that are not commonly needed, but allow for additional flexibility. Specifically, the following additional options may be configured:

- Optional Metadata Conditions - allows for applying the profile only to objects that have matching metadata.

- Days to retain archived objects on remote - allows for specifying the expiration of objects copied or moved by 1space in the destination object store.

- Synchronize Container Metadata - whether 1space should propagate container metadata (Swift only).

- Propagate Object Expiration - whether objects' X-Delete-At headers (expiration) should be copied when copying/moving them to the remote object store (Swift only).

- Propagate Expiration Offset - when copying objects' X-Delete-At headers, whether an offset should be applied to the original expiration time (Swift only; requires Propagate Object Expiration).

- Synchronize Container ACL - whether containers' Access Control headers should be copied to the remote object store, as well (Swift only).

- Convert DLOs to SLOs - whether dynamic large objects (DLOs) should be converted to static large objects (SLOs) when copied or moved to the remote object store (Swift only).

- Segment size to use when converting DLOs - 1space can optionally combine segments when copying/moving them to the remote store (Swift only).

- Retain Local Segments - whether original segments for a large object should be removed when moving the object to the remote store.

- Exclude matching objects - 1space can be configured to skip objects whose names match a given regular expression (following Python's regular expression syntax).

Do note that some aspects of a profile cannot be changed once set. For example, the number of days to retain objects in the remote store cannot be adjusted. If the expiration time is increased, objects that have already been copied will not be brought back into the cluster. However, new objects' expiration will be governed by the updated delay time. Similarly, changes to DLO conversion, segment size, retention of local segments, or the exclusion of matching objects will only apply to new objects placed into the source container.
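To illustrate the Exclude matching objects option, the following sketch filters a list of object names with a Python regular expression. The pattern and the object names are hypothetical, and anchoring the match at the start of the name (as re.match does) is an assumption about how 1space applies the expression, so test your own pattern against real object names before relying on it.

```python
import re

# Hypothetical pattern: skip anything stored under a "segments/" pseudo-directory.
EXCLUDE_PATTERN = re.compile(r"segments/")


def objects_to_copy(names, pattern=EXCLUDE_PATTERN):
    """Return the names that do NOT match the exclusion pattern."""
    # re.match anchors at the start of the name (an assumption about 1space).
    return [name for name in names if pattern.match(name) is None]


names = ["video.mp4", "segments/video.mp4/000001", "segments/video.mp4/000002"]
print(objects_to_copy(names))
```

Here only the manifest object would be copied; the segments stored alongside it are skipped.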

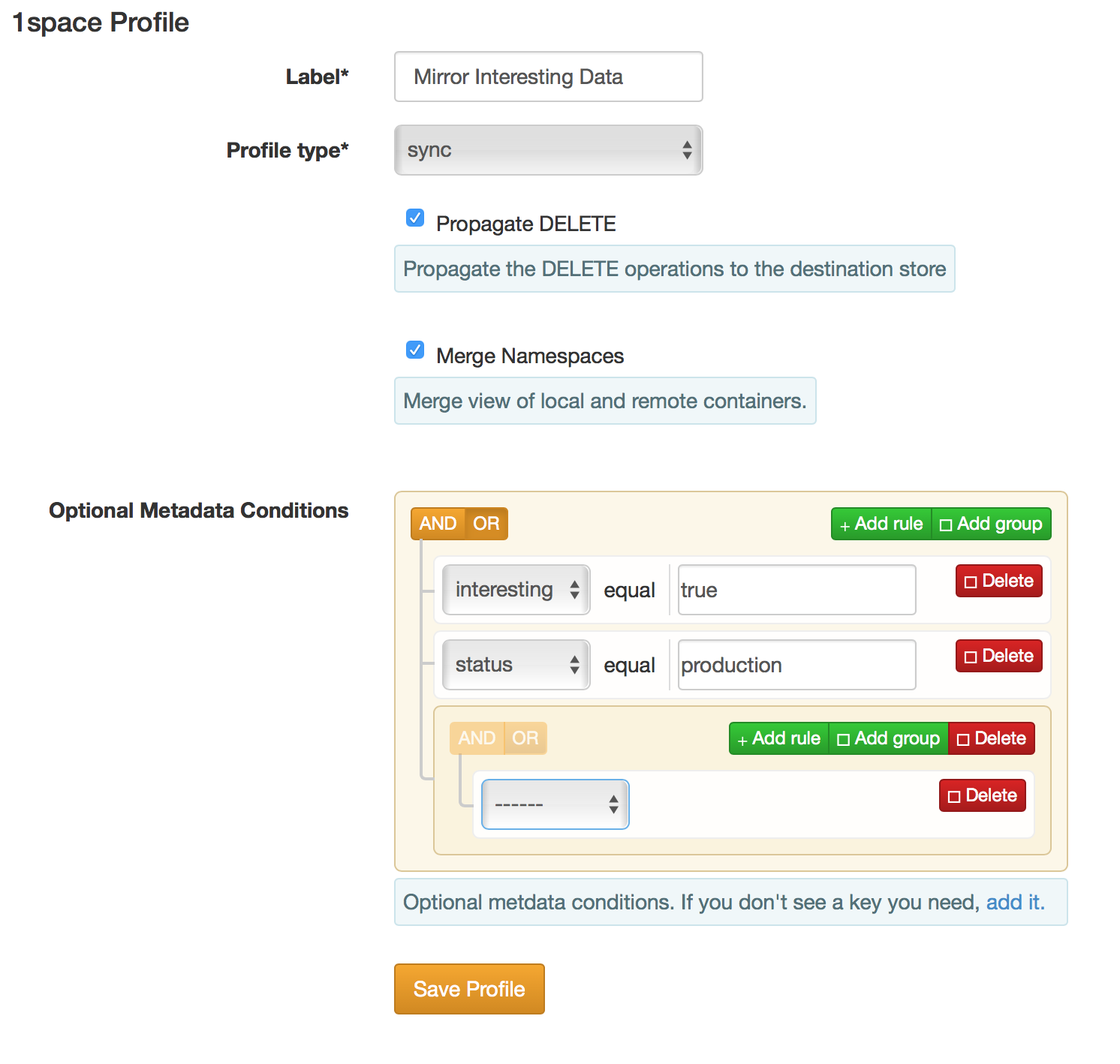

Optional Metadata Conditions¶

Administrators can use object metadata to trigger data movement events. After saving the profile, edit the profile to add the metadata keys to be considered. Then configure the conditions that must be true for the lifecycle or sync event to trigger. The Content-Type key is always available when creating a profile. If you would like to add another metadata key, you can add it by clicking the add it link. Aside from Content-Type, the other metadata keys must be Swift user (x-object-meta- prefix) metadata keys.

This functionality enables a subset of the data in the namespace to be moved to a public cloud or remote location.

Examples of usage include:

- Data lifecycle of a data subset for data processing in the cloud

- Triggering lifecycle when a project is done

- Mirroring a subset of active data

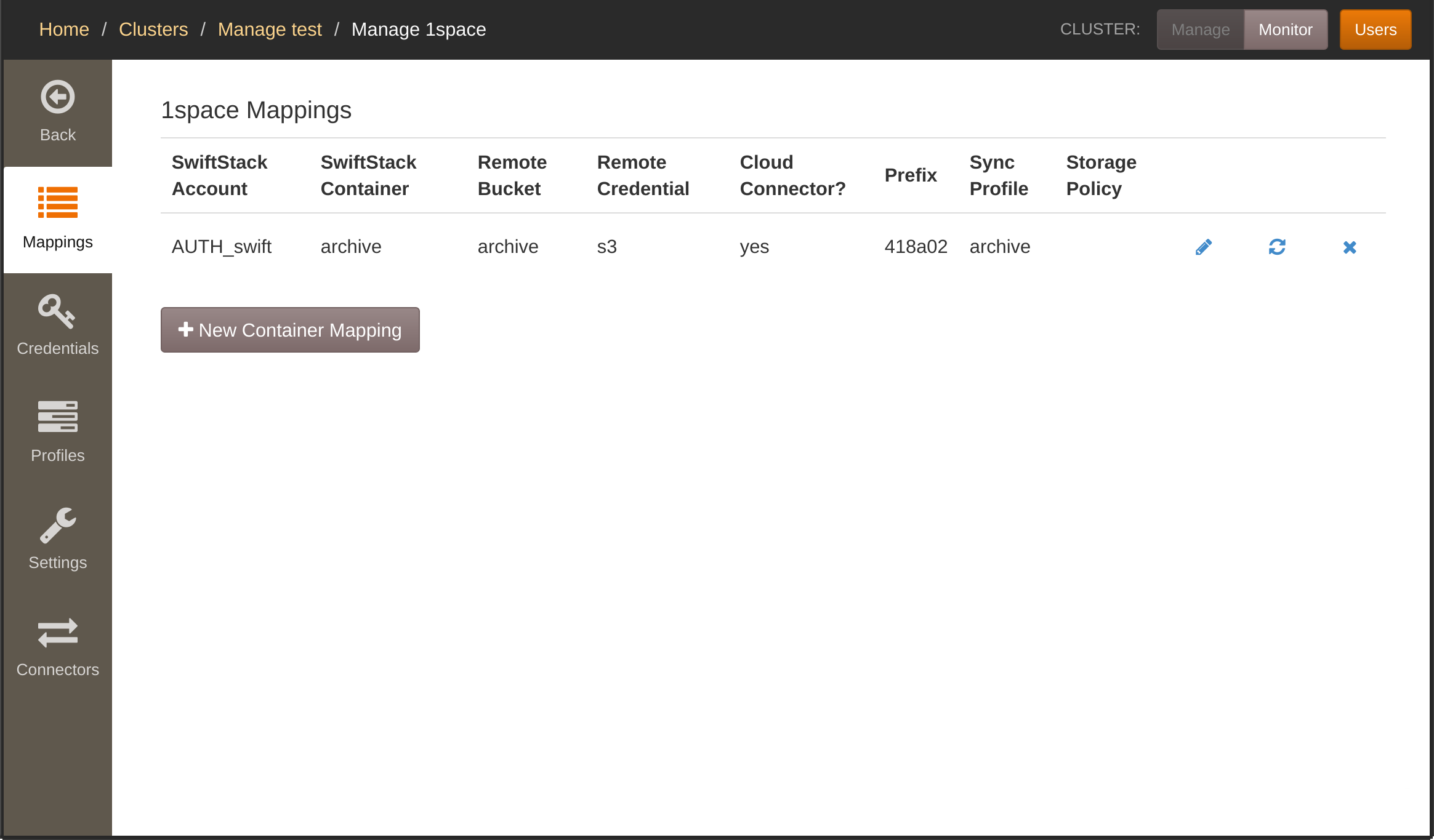

1space Mappings¶

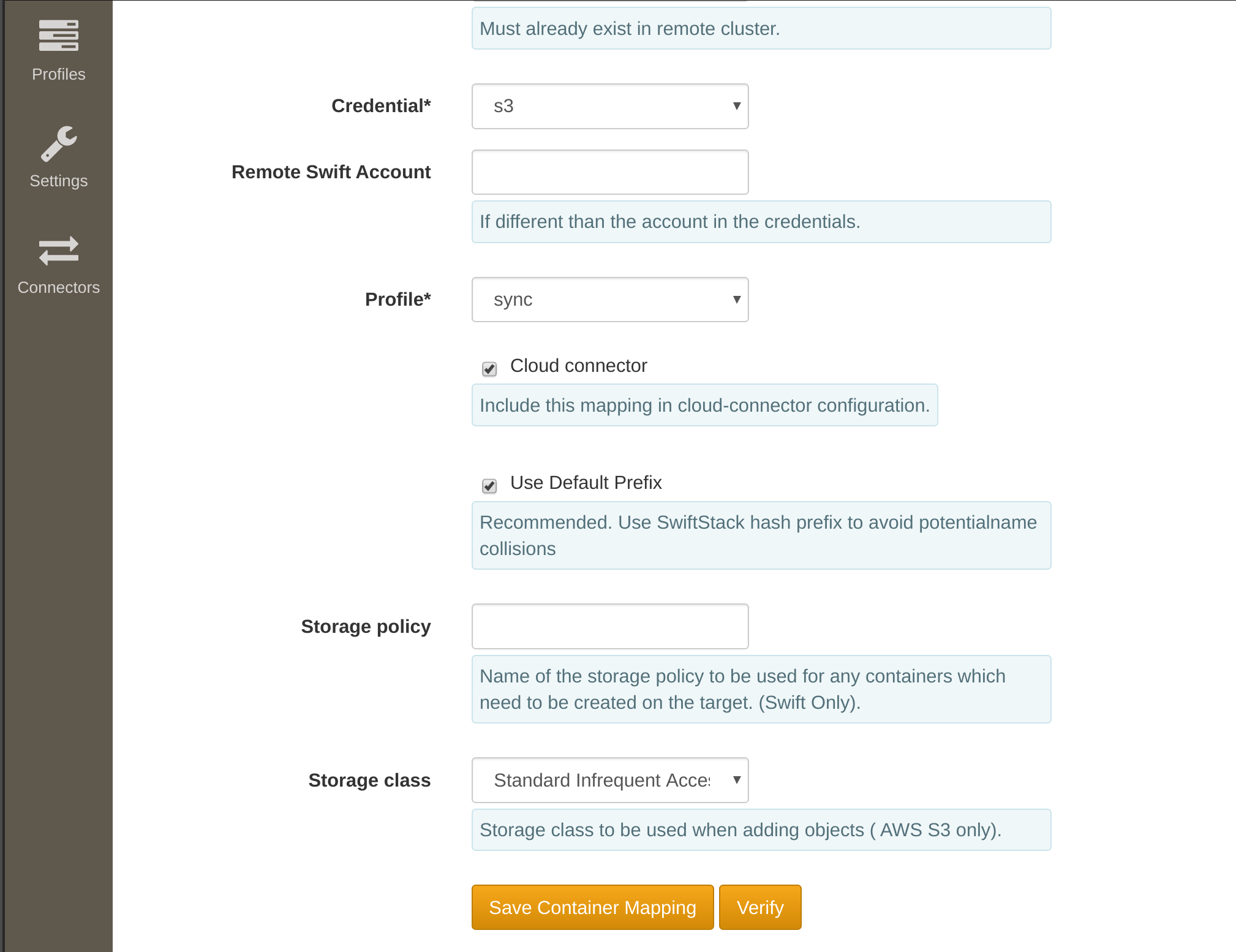

Once at least one set of credentials exists, you can create 1space mappings for existing Swift Accounts and Containers. To do so, from the Mappings tab follow these steps:

- Click New container mapping.

- Enter a Swift Account, Container, the name of the Remote Bucket, and select a Credential and 1space Profile to use.

- If cloud-connector should not expose the mapping, uncheck "Cloud connector".

- If you don't want to use the recommended default prefix for where shared-namespace data is actually stored inside the "Remote Bucket" in S3 or GCS, uncheck "Use Default Prefix" and enter a custom prefix. If you want the data to be stored in a "faux directory", the custom prefix should end with a forward slash (/). Leave the prefix blank if no prefix should be used.

- When objects are copied to a remote Swift cluster, you can specify the Storage Policy to be used for any created containers (e.g. segment containers for SLOs or if copying all containers in an account).

- When copying objects to AWS S3, you can specify the Storage Tier.

- Click "Verify" to ensure the selected credential set has sufficient permissions and that the Swift cluster nodes can successfully talk to the destination cloud.

- Finally, click "Save Container Mapping".

1space allows the administrator to enable sync for all containers in the account to a particular S3 bucket. Each container will be placed with a random prefix in the remote bucket. Please see "Swift object representation in S3" for more details on that.

Before creating the mapping, you can verify that 1space will be able to perform all of its required actions. Clicking the "Verify" button runs a short set of checks against the service provider from one of the nodes in the cluster. The checks include calls to PUT, HEAD, POST (or PUT as a server-side-copy for S3), and DELETE within the specified bucket/container. Please see below for an example IAM policy in Amazon S3 required for these tests to pass.
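If you want to run a comparable check yourself against an S3 bucket, the sketch below issues the same sequence of operations. It is an approximation of the checks described above, not 1space's own verification code. The function name and the test key are hypothetical, and the client argument is assumed to behave like a boto3 S3 client.

```python
def verify_bucket_access(client, bucket, key="1space-verify-test"):
    """Run PUT, HEAD, server-side copy, and DELETE against one test key.

    Returns the operations that succeeded; the first failing call raises.
    """
    passed = []
    client.put_object(Bucket=bucket, Key=key, Body=b"verify")
    passed.append("PUT")
    client.head_object(Bucket=bucket, Key=key)
    passed.append("HEAD")
    # S3 has no POST for metadata updates; copying the object onto itself
    # with replaced metadata is the equivalent operation.
    client.copy_object(
        Bucket=bucket,
        Key=key,
        CopySource={"Bucket": bucket, "Key": key},
        Metadata={"verified": "true"},
        MetadataDirective="REPLACE",
    )
    passed.append("COPY")
    client.delete_object(Bucket=bucket, Key=key)
    passed.append("DELETE")
    return passed
```

With boto3 installed, a call such as verify_bucket_access(boto3.client("s3"), "example-1space-bucket") would exercise the permissions of the configured credentials.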

Afterward, each mapping will appear in the table on the 1space page.

Changing the 1space profile associated with a mapping does not revert prior operations. For example, when changing from a Lifecycle to a Sync profile, the objects that have been archived and expired will not be copied back into the Swift container from the remote store. Similarly, changing from Sync to Lifecycle will not expire objects that have already been copied to the remote store. You can force 1space to reprocess all of the data in the Swift container using the reset button (see "Resetting a mapping" for more details).

Resetting a mapping¶

Objects may at some point be removed from the remote bucket, by accident or through deliberate action. 1space can repopulate all of the missing objects and ensure that all of the data is replicated. You can use the reset button (↻) next to the affected mapping to trigger this action.

The length of the actual process to re-populate the remote data depends on how many objects have been removed. 1space will continue to copy objects in the background until it has iterated through all of the objects in the Swift container and ensured they are replicated.

If a mapping is configured to sync all containers, resetting the mapping will reset the state for each one of them.

1space Data Location¶

You can find out where the objects are located by issuing a GET request against the archived container and specifying the json format. 1space will return a content_location entry for each key. It specifies whether an object is in the remote store, in local Swift storage, or both (which can happen if the object is restored on a GET).
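As a rough illustration, the sketch below groups the names in a JSON container listing by their content_location entry. The sample listing is hypothetical: the exact values and shape of content_location are not specified here, so the code accepts either a single string or a list of strings, and the "swift" and "remote-store" values are placeholders.

```python
import json
from collections import defaultdict


def group_by_location(listing_json):
    """Group object names by their content_location value."""
    groups = defaultdict(list)
    for entry in json.loads(listing_json):
        location = entry.get("content_location", "unknown")
        # Normalise to a tuple so a single value and a list of values
        # can both be used as dictionary keys.
        if isinstance(location, str):
            location = (location,)
        groups[tuple(location)].append(entry["name"])
    return dict(groups)


# Hypothetical body of a GET on the container with format=json.
sample = json.dumps([
    {"name": "a.txt", "bytes": 10, "content_location": ["swift"]},
    {"name": "b.txt", "bytes": 20, "content_location": ["remote-store"]},
    {"name": "c.txt", "bytes": 30, "content_location": ["swift", "remote-store"]},
])
print(group_by_location(sample))
```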

S3 Specific Information¶

Custom Object Prefix¶

If desired, a custom object prefix can be used to point a mapping at a specific location in the remote bucket. This is useful for a 1:1 mapping of a SwiftStack bucket/container to a remote S3 bucket.

The prefix may also name a specific path within the remote bucket that the mapping should connect to.

Note

While a custom object prefix adds configuration flexibility, take care to ensure that the specified custom prefix does not overlap with other locations.

Automatic Hashed Object Prefix¶

To allow objects from multiple Swift containers to appear in an S3 bucket, the S3 keys include the account and container. To prevent all keys from being stored with the same prefix for a given account, 1space by default prepends a hashed prefix to each key. The prefixes for each mapping are listed in the 1space configuration table and are deterministically derived from the account and container. For example, an object named object in a Swift container named container under the account AUTH_account will be stored in the S3 bucket as 62506b/AUTH_account/container/object.

The prefix for each mapping is listed on the 1space page to make it easier to locate data. 1space allows many Swift accounts and buckets/containers to be replicated to S3 under one account.

If an application needs to be able to determine the prefix programmatically, you can implement the following pseudocode:

hash = md5("<account>/<container>")

prefix = long(hash) % 16^6

In Python 3, this would look as follows:

import hashlib

# md5() requires bytes in Python 3, and int replaces the Python 2 long type.
h = hashlib.md5(b'AUTH_test/test').hexdigest()

prefix = hex(int(h, 16) % 16**6)[2:]

Stripping the leading two characters removes the "0x" from the hex string. In the example above, the prefix would be 2e122e.
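Building on the prefix computation, the following sketch assembles the full S3 key in the prefix/account/container/object layout described above. It is written for Python 3, and the function names are hypothetical. Note that hex() drops leading zeros, so the prefix can be shorter than six characters; whether 1space pads it is not stated here.

```python
import hashlib


def hashed_prefix(account, container):
    """Prefix derived from the MD5 of "<account>/<container>"."""
    digest = hashlib.md5(f"{account}/{container}".encode("utf-8")).hexdigest()
    # The modulo keeps the value of the last six hex digits; hex() adds "0x".
    return hex(int(digest, 16) % 16**6)[2:]


def remote_key(account, container, obj):
    """S3 key in the <prefix>/<account>/<container>/<object> layout."""
    return "/".join([hashed_prefix(account, container), account, container, obj])


print(remote_key("AUTH_account", "container", "object"))
```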

Microsoft Azure Blob specific information¶

Data Representation¶

1space uses Azure Blob Block Blobs for all data it copies into Azure. Each block in Microsoft Azure is limited to 100MB, which is much smaller than the maximum default single object size in Swift (5GB). 1space splits Swift objects and their segments (in case of large objects) into 100MB blocks.
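The arithmetic behind this split can be sketched as follows. The block size is interpreted as 100 MiB and the object size as 5 GiB, which is an assumption about the units; the function name is hypothetical.

```python
BLOCK_SIZE = 100 * 1024 * 1024  # 100MB, interpreted here as 100 MiB (assumption)


def block_ranges(object_size, block_size=BLOCK_SIZE):
    """Return (offset, length) pairs covering an object of object_size bytes."""
    return [
        (offset, min(block_size, object_size - offset))
        for offset in range(0, object_size, block_size)
    ]


ranges = block_ranges(5 * 1024**3)  # a maximum-size 5 GiB Swift object
print(len(ranges), ranges[-1])
```

Under these assumptions, a maximum-size Swift object maps to 52 blocks, the last of which is shorter than the rest.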

Data integrity¶

Microsoft Azure Blob validates data integrity using the CRC64 algorithm. When uploading objects to Azure Blob, 1space verifies the CRC64 value in the response against its own CRC64 computation of the uploaded bytes.

Naming¶

Microsoft Azure Blob imposes a number of limitations on the acceptable container, blob, and metadata key names, namely:

- container names must be valid DNS names

- blob names must be at most 1,024 characters

- metadata keys must be valid C# identifiers

1space deals with these issues as follows:

- containers with non-conforming names are ignored by 1space

- metadata keys are URL (percent) encoded, with the percent (%) character additionally replaced by an underscore (_)

Listing Objects¶

Microsoft Azure Blob does not provide a way to resume a listing from a given object name (i.e. a marker in Swift). When it receives a request to resume a listing from a marker, 1space therefore has to list all of the container's objects that precede the specified marker.

When listing the entire container, 1space does cache the marker returned by Azure Blob to resume listing on subsequent requests.
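The cost of emulating a Swift marker can be seen in a small sketch. It is illustrative only and not 1space's implementation: the function name is hypothetical, and all_names stands for any iterable that yields blob names in sorted order, such as a generator that pages through the whole container.

```python
def list_after_marker(all_names, marker, limit=10000):
    """Emulate Swift's marker semantics over a full, sorted listing."""
    results = []
    for name in all_names:
        if name <= marker:
            continue  # at or before the marker: scanned, but not returned
        results.append(name)
        if len(results) >= limit:
            break
    return results


print(list_after_marker(["a", "b", "c", "d"], marker="b", limit=2))
```

Every name up to the marker is still read from the listing, which is why resuming deep into a large container is expensive.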

Large Objects¶

Static Large Objects¶

1space propagates Static Large Object (SLO) manifests to S3 using the S3 Multipart Upload (MPU) interface. As Amazon S3 restricts the size of each MPU part (except for the last part) to be between 5MB and 5GB, 1space will reject any SLO manifests that have parts outside that range. For example, an SLO that is composed of two objects, where the size of the first object is 1MB and the second object is 100MB, would be deemed invalid. In such cases, 1space will report an error in the log file, but continue to process the other objects in the container.

When uploading data to Google Cloud Storage, as the provider does not implement the MPU API, 1space converts the SLO manifest into a single stream. This results in a single file upload and is valid for objects up to 5TB, the largest allowable single object size in Google Cloud Storage.
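To check in advance whether an SLO would pass the part-size restriction, a sketch along the following lines can be used. It assumes the limits are 5 MiB and 5 GiB and that the last part is exempt only from the lower bound; the function name is hypothetical.

```python
MIN_PART = 5 * 1024**2  # 5MB lower bound (taken as MiB here)
MAX_PART = 5 * 1024**3  # 5GB upper bound (taken as GiB here)


def invalid_parts(segment_sizes):
    """Return indexes of segments that S3 would not accept as MPU parts."""
    last = len(segment_sizes) - 1
    bad = []
    for index, size in enumerate(segment_sizes):
        too_small = size < MIN_PART and index != last  # last part may be small
        too_large = size > MAX_PART
        if too_small or too_large:
            bad.append(index)
    return bad


# The example from the text: a 1MB segment followed by a 100MB segment.
print(invalid_parts([1 * 1024**2, 100 * 1024**2]))  # [0]
```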

For Microsoft Azure Blob, 1space uses Block blobs. They are similar to SLOs, but have a smaller maximum size. 1space splits up large SLO segments into smaller Azure Blob blocks as necessary. In the end, 1space stores the Content-MD5 of the Azure Block Blob to match the Swift SLO ETag.

In the case of Microsoft Azure Blob, S3, and Google Cloud Storage, 1space uploads the original static large object manifest. These manifests appear under the .manifests namespace and are required in case the large object should be restored back to Swift.

Dynamic Large Objects (DLO)¶

When using Dynamic Large Objects (DLO) and the destination cluster is Swift, 1space will correctly propagate the manifest and its segments. You can also configure a 1space profile to convert DLOs to Static Large Objects (SLOs). At the same time, object segments can either be preserved or combined to a larger minimum size. This is helpful if the original DLO segments were too small.

When converting DLOs to SLOs (or changing the minimum segment size), the original DLO manifest must be an empty object. Further, if the segments for the large object were stored alongside the manifest, you can use the profile's "exclude pattern" option to exclude all objects that match the specified regular expression. This will prevent the issue of the object's segments being duplicated in the destination segments container.

For S3, Google Cloud Storage, and Microsoft Azure Blob, DLOs are uploaded in the same way as the static large objects. The objects are converted to a multipart upload, a single blob, or a block blob, respectively.

Limitations¶

1space does not currently support SLO manifests that have more than 10000 parts. It also does not support SLO manifests composed of other SLO objects. In this case, you should expect the upload to fail or be malformed, depending on whether the nested SLO is at the end of the original manifest.

Lastly, 1space does not support the range option in the SLO manifests. When 1space encounters an SLO with any segment entry that contains a range, an error will be recorded and 1space will move on to other objects.
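The limitations above can be checked before uploading with a sketch like the one below. It assumes the manifest has already been parsed into a list of dictionaries and that nested SLOs and ranges are flagged with sub_slo and range keys, as in the manifest Swift returns for a multipart-manifest=get request. Treat those key names, and the function name, as assumptions.

```python
MAX_PARTS = 10000


def unsupported_reasons(manifest):
    """List the reasons a parsed SLO manifest would not be supported."""
    reasons = []
    if len(manifest) > MAX_PARTS:
        reasons.append("more than 10000 parts")
    if any(segment.get("sub_slo") for segment in manifest):
        reasons.append("nested SLO segment")
    if any(segment.get("range") for segment in manifest):
        reasons.append("range segment")
    return reasons


manifest = [
    {"name": "/segments/part-1", "bytes": 10485760},
    {"name": "/segments/part-2", "bytes": 10485760, "range": "0-1023"},
]
print(unsupported_reasons(manifest))
```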

Configuring Amazon S3 IAM Policy¶

To follow AWS S3 best practices, Amazon recommends using a restricted set of actions. For 1space, the policy can be restricted to a set of buckets on which it operates.

To create an IAM policy, perform the following steps in the AWS Management Console:

- Create an IAM policy as explained below

- Create or select an IAM group and attach the IAM policy to that group

- Assign a user (creating a user if required) into that group

- Capture the access key information to use as the access key / secret key when configuring 1space credentials.

Creating an IAM policy¶

The following requests must be allowed on those resources:

- PutObject

- DeleteObject

- GetObject

- ListBucket

Here is an example of an IAM policy for 1space on a specific bucket (example-1space-bucket):

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:DeleteObject",
        "s3:GetObject"
      ],
      "Resource": [
        "arn:aws:s3:::example-1space-bucket/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket",
        "s3:GetBucketLocation"
      ],
      "Resource": [
        "arn:aws:s3:::example-1space-bucket"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListAllMyBuckets"
      ],
      "Resource": [
        "arn:aws:s3:::*"
      ]
    }
  ]
}

- PutObject is required to write objects into S3.

- DeleteObject is required to delete the objects that have been removed from the Swift container.

- GetObject is required because 1space issues a HEAD request to check whether an object has already been copied.

- ListBucket is required for S3 to return 404 (Not Found), as opposed to 403 (Forbidden), if the object is not found (when issuing HEAD).

Lastly, ListAllMyBuckets is required for 1space to verify the credentials when a new set is created.
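When 1space operates on several buckets, the policy can be generated rather than edited by hand. The sketch below builds a policy document with the same actions as the example above for a list of bucket names; the function name is hypothetical, and the output should be reviewed before it is attached to a group.

```python
import json


def build_policy(buckets):
    """Build an IAM policy document covering the given bucket names."""
    bucket_arns = [f"arn:aws:s3:::{name}" for name in buckets]
    object_arns = [f"{arn}/*" for arn in bucket_arns]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow",
             "Action": ["s3:PutObject", "s3:DeleteObject", "s3:GetObject"],
             "Resource": object_arns},
            {"Effect": "Allow",
             "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
             "Resource": bucket_arns},
            {"Effect": "Allow",
             "Action": ["s3:ListAllMyBuckets"],
             "Resource": ["arn:aws:s3:::*"]},
        ],
    }


print(json.dumps(build_policy(["example-1space-bucket"]), indent=2))
```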

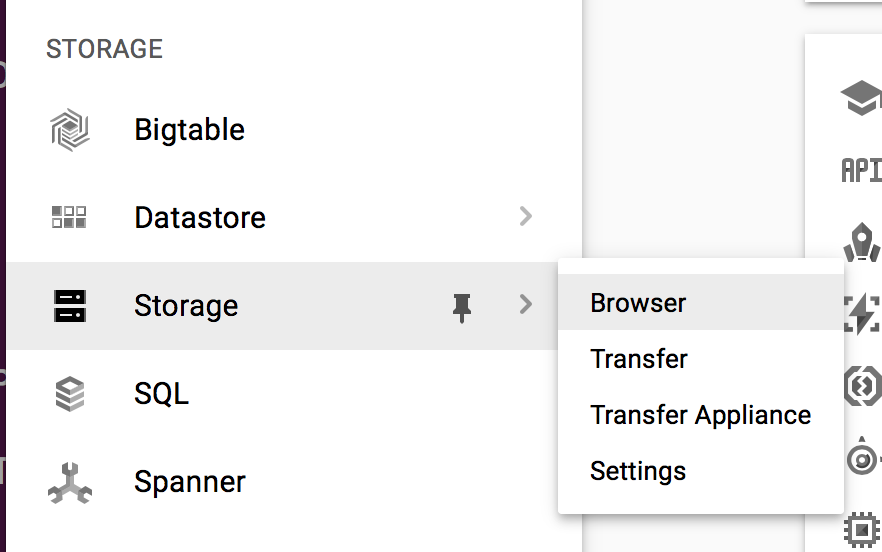

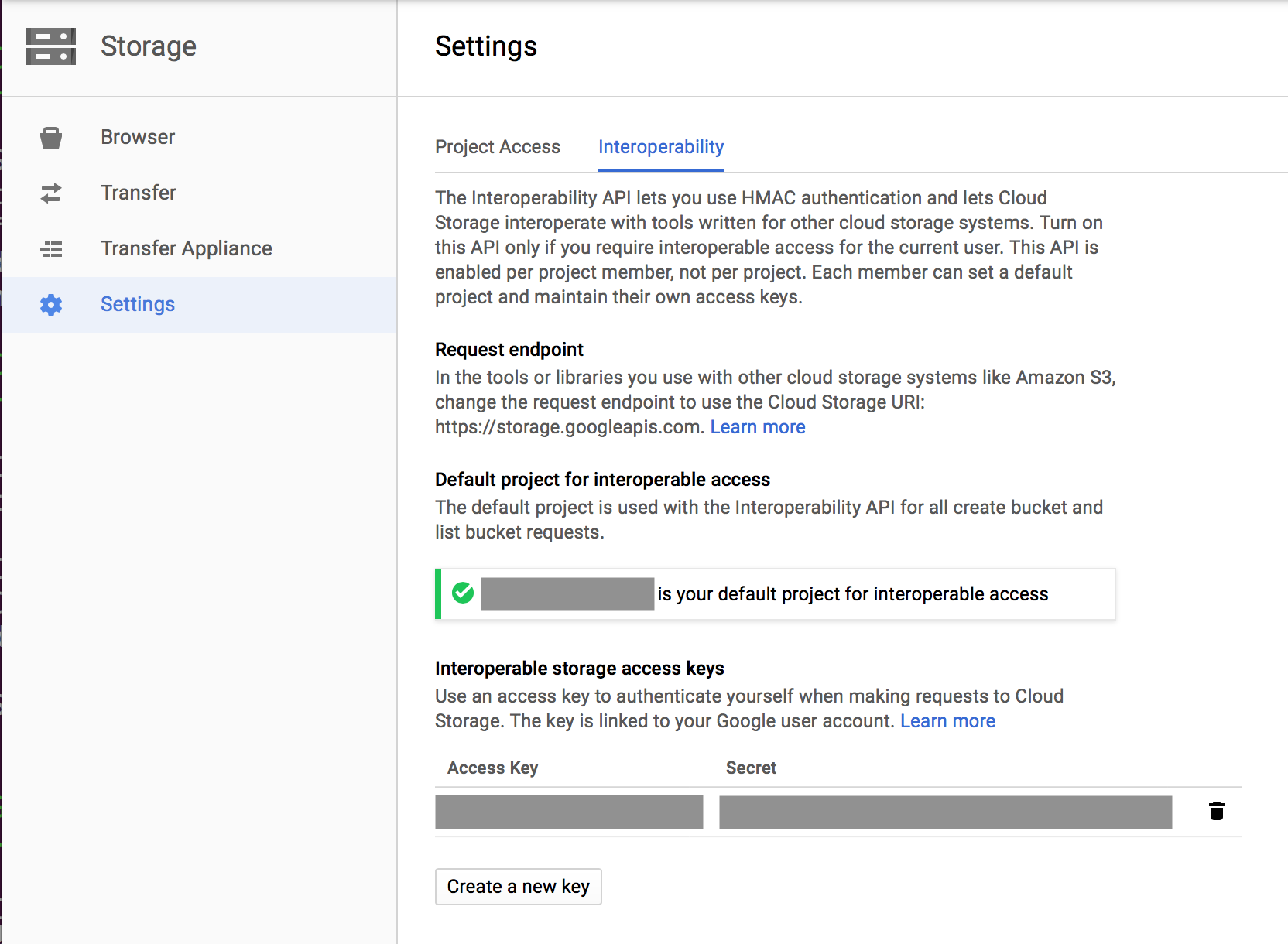

Configuring S3 Interoperability in Google Cloud Storage¶

If you haven't already, enable S3 Interoperability by navigating to the Cloud Storage Settings page.

- Select Interoperability

- Click Make this my default project

- Click Create a new key

- Capture the access key information to use as the access key / secret key when configuring 1space credentials.

For more information see Google's migration guide.

Swift Data Encryption¶

If Swift encryption is enabled, 1space will decrypt the object contents before storing the content in the remote store.