SwiftStack Cluster Hardware Requirements

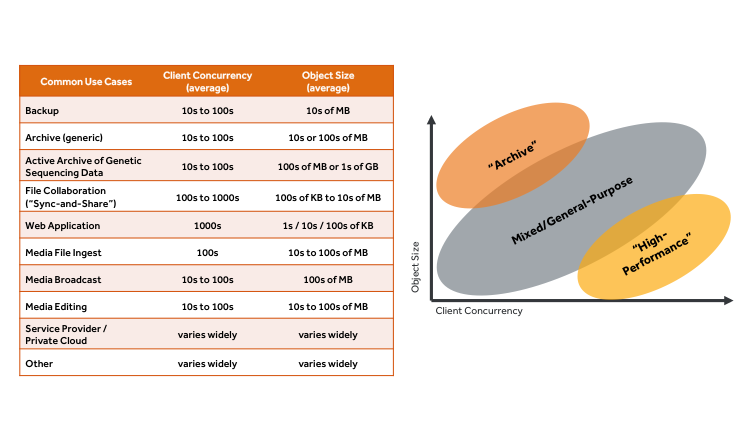

As this section guides you through the hardware selection process for a SwiftStack deployment, it is important to keep in mind your configuration needs for balancing I/O performance, capacity, and cost for your workload. This means, for example, that customer-facing web applications handling a large number of concurrent users will have a different profile than a workload that is primarily archiving large amounts of data.

Networked Nodes Architecture

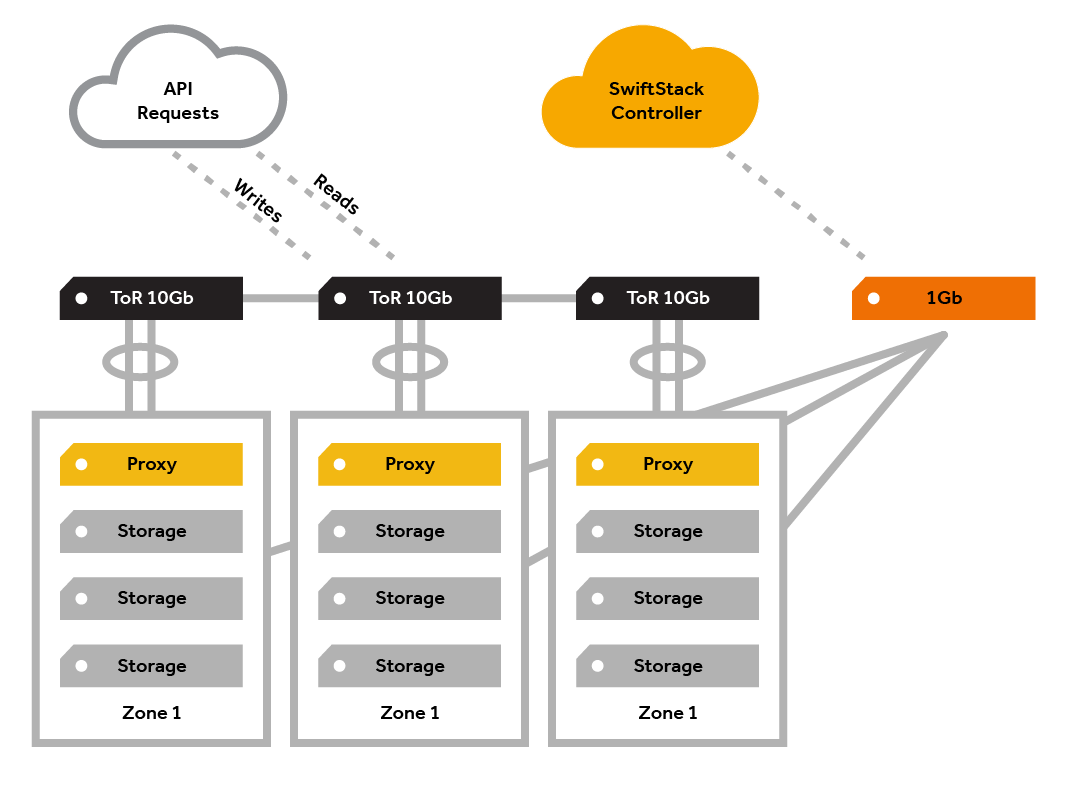

Your production SwiftStack deployment will consist of a number of nodes providing proxy and storage services, connected to each other and to the SwiftStack Controller. The hardware requirements for your cluster fall into two main categories: networking and nodes. A third category applies if your organization chooses to run a SwiftStack Controller On-Premises.

Generic cluster topology:

Networking

The SwiftStack deployment will be embedded in your current network topology, so you will need to determine which hardware and configuration changes that requires (switches, firewalls, etc.). You may need to consult with your network engineering team. The SwiftStack deployment itself uses several different network segments.

Outward-facing network

This front-facing network is for API access and to run the proxy and authentication services.

If you are not using the SwiftStack load balancer, note that you will need to consider how you will use an external load balancing solution to distribute client load between the available proxy servers.

Cluster-facing network

This internal network is for communication between the proxy servers and the storage nodes. Traffic on this network is not encrypted, so this network must be otherwise secured (private).

(Optional) Replication network

This optional internal network is used to separate the data replication traffic from the cluster-facing network. Replication traffic exists when data is redistributed throughout the cluster—such as when a drive failure occurs, capacity is added or removed, or when using write affinity in a multi-region cluster.

Network route to a SwiftStack Controller

All nodes must be able to route IP traffic to a SwiftStack Controller. This could be a SwiftStack Controller On-Premises deployed within your network, or SwiftStack Controller As-a-Service.

Hardware management network

This network carries hardware management traffic (IPMI, iLO, etc.).

Tip

When determining the bandwidth capacity needed for each network, keep in mind that each write of an object from a client to a proxy server results in multiple writes to storage servers. If a three-replica storage policy is used, then each object written to a proxy server will result in three (3) writes to different storage servers. So, in that case, to ingest 2 Gbps of data, the proxy server must be able to send 6 Gbps of data to storage servers. Similarly, if an 8+4 erasure coding storage policy is used, then each object written to a proxy server will be broken into eight segments, and four additional parity segments will be created, then all 12 segments will be written to the storage servers. So, in that case, to ingest 2 Gbps of data, the proxy server must be able to send 3 Gbps of data to storage servers.
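The bandwidth multiplication described in the tip can be sketched as a small calculation. This is an illustrative sketch only, not part of any SwiftStack tooling; the function name `cluster_facing_gbps` and its parameters are hypothetical.

```python
def cluster_facing_gbps(ingest_gbps, replicas=None, ec_data=None, ec_parity=None):
    """Return the proxy-to-storage bandwidth needed to sustain a client ingest rate.

    Pass `replicas` for a replica policy, or `ec_data` and `ec_parity`
    for an erasure coding policy (hypothetical parameter names).
    """
    if replicas is not None:
        # Each replica is a full copy of the object.
        return ingest_gbps * replicas
    # Erasure coding writes (data + parity) segments, each 1/data the object size.
    return ingest_gbps * (ec_data + ec_parity) / ec_data


# The two cases from the tip: 2 Gbps of client ingest.
print(cluster_facing_gbps(2, replicas=3))              # three-replica policy
print(cluster_facing_gbps(2, ec_data=8, ec_parity=4))  # 8+4 erasure coding
```

The first call gives the 6 Gbps figure for three replicas and the second gives the 3 Gbps figure for 8+4 erasure coding.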

Load Balancing

For information on using the built-in SwiftStack load balancer or an external product, review the Load Balancing documentation.

Node Configuration

Zones

Nodes are often described by their physical and logical grouping. For the node itself, there is a collection of hard drives (disks) in a server (node) which is generally deployed in a rack. When several nodes share a physical point of failure (like the power to a rack or connection to the same switch) they may be grouped into the same zone. In other words, zones represent tangible, real-world failure domains, and telling Swift where they are helps Swift increase your data availability.

Services

A server running the SwiftStack node software will perform one or more of the following services:

- Proxy Services

- Account Services

- Container Services

- Object Services

Proxy services handle all incoming API requests. They receive a request, examine the URL of the object, and then determine which storage node to connect to. As data is uploaded to SwiftStack, proxy services will write the data as uniquely as possible in the storage tier. Only when a quorum of writes is successful will the user be notified that the upload was successful.

Proxy services coordinate and handle:

- All incoming API requests

- Responses

- Timestamps

- Load balancing (if running the SwiftStack load balancer)

- Erasure Coding

- Encryption

- Failures

- SSL terminations

- Authentication

The proxy services do not cache objects, but they do use the cache to store other data to improve performance. For example, the proxy services:

- Cache information about an account including the list of its containers

- Cache container data – list of its objects and access-control list information

- Store cname lookups so that an account URL can be mapped to a hostname

- Store static web data (index, CSS, for example)

- Store authentication tokens

- Keep track of client requests when rate limiting is enabled

Account, Container, and Object services are responsible for the following:

- Responding to incoming requests from proxy nodes to store, retrieve, and update accounts, containers, and objects

- Replicating accounts, containers, and objects

- Auditing the data integrity of accounts, containers, and objects

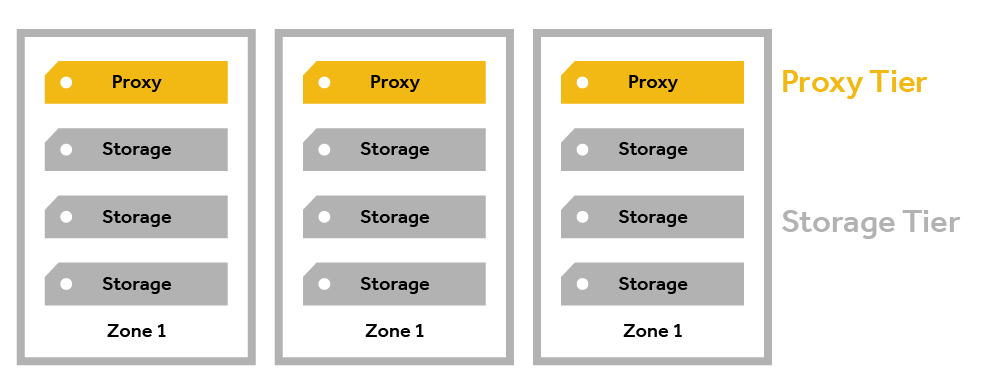

Nodes are typically configured with one of three combinations of services:

- Proxy, Account, Container (PAC)

- Object Services (O)

- All Services (PACO, "combined," "Swift," "all-in-one")

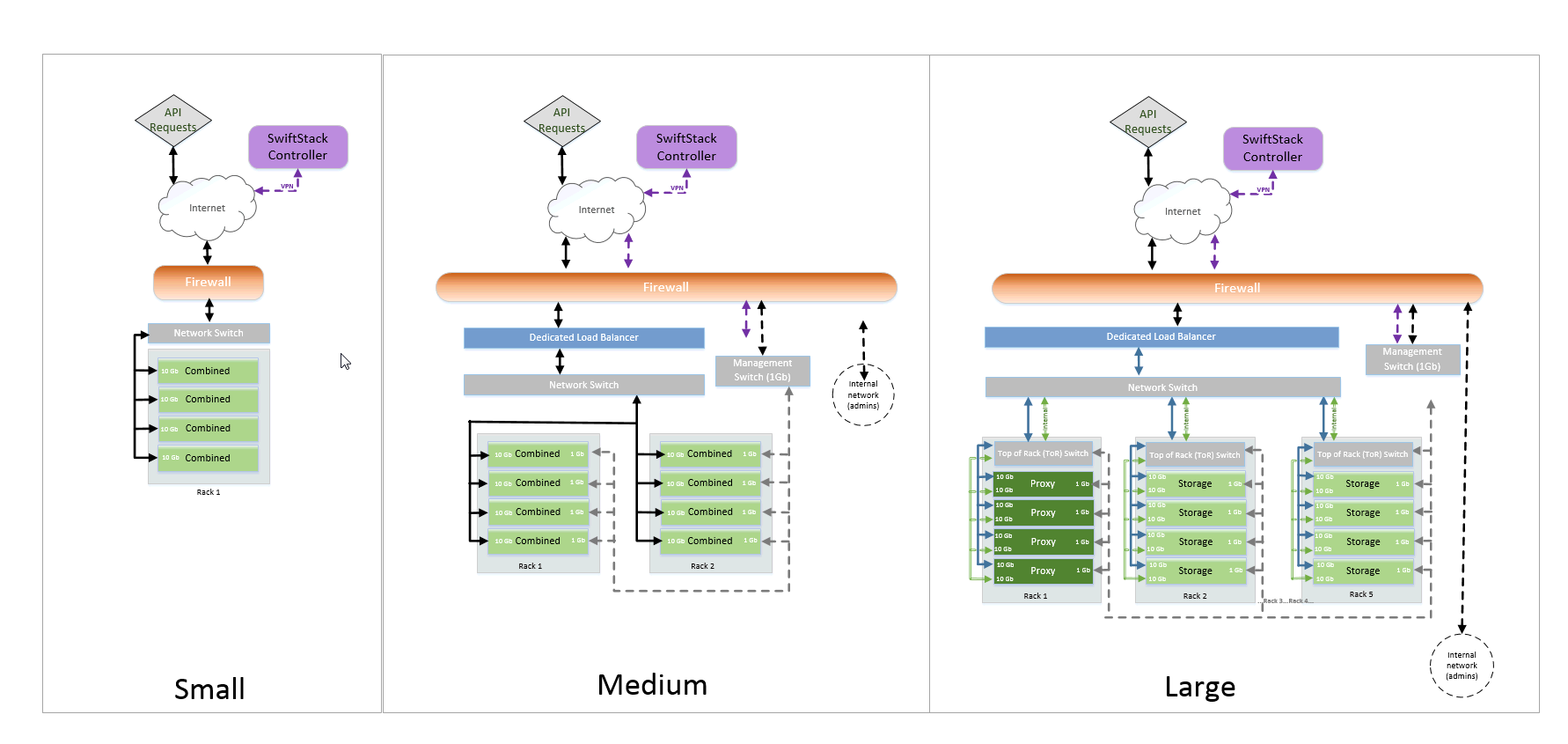

Smaller configurations (i.e., less than 10PB usable) generally use combined "PACO" nodes, while larger configurations may have separate proxy (P) and storage (ACO) nodes on physical servers. The different node types will have different configurations, as the proxy nodes require relatively more CPU and only a few disks, while the storage nodes need relatively less CPU and a larger number of disks.

For the following diagram, consider these rough capacity sizes:

- Small: Less than 10 PB usable capacity

- Medium: 10 - 30 PB usable capacity

- Large: 30+ PB usable capacity

General Hardware Information

While nodes share basic hardware configuration (chassis, CPU, RAM, hard disk and network cards), specific configurations may require additional components.

Warning

RAID should not be used for the storage nodes. SwiftStack provides durability and availability by distributing data across multiple nodes, and if there is a disk-level failure in SwiftStack, the request is routed to another location. If the system is using RAID, the RAID layer masks the failure and adds very high latency while it rebuilds. Also, parity RAID is often slow during writes due to its poor random-write characteristics.

About components:

| Component | Guidance |
|---|---|
| Chassis | Standard 1U-4U chassis are generally used. |
| CPU | Intel Xeon E5-2600 series or comparable AMD CPUs are common. See the individual node guidance below. |
| RAM | See the individual node guidance below. |
| Storage disks | 7200-RPM enterprise-grade SATA or SAS drives. 6TB or 8TB 7200-RPM SATA drives tend to provide good price/performance value today. Since the system does not use RAID, each request for an object is handled by a single disk. Therefore, faster drives may increase single-threaded response rates, but the slight performance benefit of 10k- or 15k-RPM drives is rarely worth the additional cost. SwiftStack does not recommend using “green” drives: the software continuously verifies data integrity, so the power-down/power-up functions of green drives may result in excess wear. SwiftStack also currently recommends against the use of SMR drives in SwiftStack clusters. |
| OS disks | Minimum 100 GB |
| SSDs | Only used for Account & Container services; see the individual node guidance below. |
| Network cards | For outward-facing and cluster-facing networks, use single- or dual-port 10 GbE adapters. For out-of-band hardware management (e.g., IPMI, iLO) and in-band cluster management traffic to the SwiftStack Controller, use single-port 1 GbE adapters. |
| RAID controller cards | As described above, RAID cards should be avoided wherever possible. If one is used, set it to "JBOD" or "pass-through" mode if available. If no "pass-through" mode is available, create one RAID 0 group for each drive (e.g., in a storage node with 24 drives, there would be 24 RAID 0 "volumes," each containing one drive). |

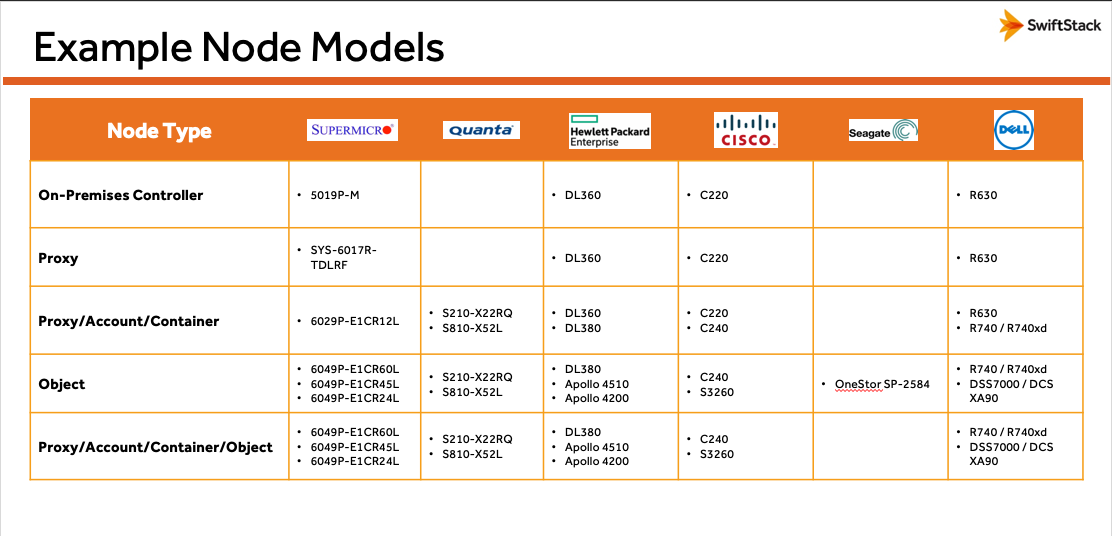

Example Server Models

Hardware vendors introduce and modify their server products and prices regularly, so rather than providing guidelines with specific makes and models that may not be the best fit for your configuration, we encourage you to apply the guidelines below to the specifications (disk size, processor speed) of the hardware that is available to you to determine the best configuration for your deployment. With that in mind, this table provides a sampling of common models deployed in production among SwiftStack customers today:

Specific Hardware Sizing

On-Premises Controller

| Component | Specification |
|---|---|
| CPU | |
| Memory | |
| HDDs | |
| SSDs | |
| Network | |
| Notes | |

Proxy/Account/Container/Object (PACO) Nodes

| Component | Specification |
|---|---|
| CPU | |
| Memory | |
| HDDs | |
| SSDs | |
| Network | |
| Notes | |

Proxy Nodes

| Component | Specification |
|---|---|
| CPU | |
| Memory | |
| HDDs | |
| Network | |
| Notes | |

Proxy/Account/Container (PAC) Nodes

| Component | Specification |
|---|---|
| CPU | |
| Memory | |
| HDDs | |
| SSDs | |
| Network | |
| Notes | |

Account/Container/Object (ACO) Nodes

| Component | Specification |
|---|---|
| CPU | |
| Memory | |
| HDDs | |
| SSDs | |
| Network | |
| Notes | |

Object (O) Nodes

| Component | Specification |
|---|---|
| CPU | |
| Memory | |
| HDDs | |
| Network | |
| Notes | |

Example Configuration

Stated Requirements

A SwiftStack customer asked for assistance developing a hardware configuration with the following basic requirements:

- Usable Capacity: 3 PB

- Throughput: 5 GB/sec ingest

- Use Case: Backup workload using industry-leading backup software

- Median object size: 5MB

- Regions: 3 data centers

- Data Protection: 3 replicas of each object

- Hardware Preference: Considering two vendors: "Vendor A" offers a 4U 60-drive server, and "Vendor B" offers a 4U 90-drive server

Configuration Logic

Using a combined node (PACO) is preferred, if possible. However, there can be compelling reasons to separate the Proxy function from the ACO services, such as security (network isolation) or controlled scale-out of performance and/or capacity (for example, backups with retention or time requirements).

PACO Nodes

The requirement for 5 GB/sec of ingest throughput equates to roughly 5 * 8 bits/byte = 40 Gb/sec on the "outward-facing network" and 40 * 3 replicas = 120 Gb/sec on the "cluster-facing network;" therefore the total proxy tier throughput required is 40 + 120 = 160 Gb/sec.

If we assume each node is capable of approximately 20 Gb/sec (2 x 10GbE NIC) of total throughput (i.e., 5 Gb/sec "outward-facing" and 15 Gb/sec "cluster-facing"), then we need approximately 8 proxy nodes. This provides us with a minimum number of nodes to meet the network requirement.
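The network arithmetic above can be sketched in Python. This is an illustrative calculation under the assumptions stated in the text (three replicas, roughly 20 Gb/sec per node); the function name `min_nodes_for_throughput` is hypothetical and not part of any SwiftStack tool.

```python
import math


def min_nodes_for_throughput(ingest_gbytes_per_sec, replicas, node_gbits_per_sec):
    """Return (outward, cluster, total, nodes) for a target ingest rate."""
    outward = ingest_gbytes_per_sec * 8   # GB/sec -> Gb/sec on the outward-facing network
    cluster = outward * replicas          # each object is written once per replica
    total = outward + cluster             # total throughput the proxy tier must carry
    nodes = math.ceil(total / node_gbits_per_sec)
    return outward, cluster, total, nodes


outward, cluster, total, nodes = min_nodes_for_throughput(5, 3, 20)
print(f"outward={outward} Gb/sec, cluster={cluster} Gb/sec, "
      f"total={total} Gb/sec, minimum nodes={nodes}")
```

With the example inputs this reproduces the 40, 120, and 160 Gb/sec figures and the minimum of 8 nodes.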

To get 3PB of usable capacity with three replicas of each object, roughly 9PB of raw capacity is required. If desired, you can also account for the difference between TB and TiB and/or add some capacity (e.g., 20%) to ensure the cluster has room to continue its data availability and integrity operations when it is "full."

Using 12TB HDDs, for example, 9PB of raw capacity requires approximately (9 * 1024) / 12 = 768 HDDs.
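A minimal sketch of the capacity calculation follows. The function name `hdd_count` and the `headroom` parameter are hypothetical; the 1024 TB-per-PB conversion mirrors the formula in the text, and the 20% headroom in the second call is only the optional example the text mentions.

```python
import math


def hdd_count(usable_pb, replicas, hdd_tb, headroom=0.0, tb_per_pb=1024):
    """Return (raw capacity in PB, number of HDDs needed)."""
    # Raw capacity is usable capacity times the replica count,
    # optionally padded with extra headroom (e.g., 0.2 for 20%).
    raw_pb = usable_pb * replicas * (1 + headroom)
    drives = math.ceil(raw_pb * tb_per_pb / hdd_tb)
    return raw_pb, drives


raw_pb, drives = hdd_count(3, 3, 12)
print(raw_pb, drives)                       # the 9 PB / 768-drive case from the text

_, padded = hdd_count(3, 3, 12, headroom=0.2)
print(padded)                               # drive count with 20% extra headroom
```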

Node Count

The number of object nodes will depend on the vendor chosen:

- A: 768 / 60 = ~13 servers

- B: 768 / 90 = ~9 servers

For even distribution of nodes across the three desired regions, we may choose to partially populate or slightly over-provision raw capacity in 15 servers from Vendor A.
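The node counts, including rounding up to a multiple of the region count, can be sketched as follows. The function name `server_count` is hypothetical, and rounding up to the next multiple of three is one way to read "even distribution" here, not a SwiftStack rule.

```python
import math


def server_count(hdds, drives_per_server, regions):
    """Return (minimum servers, servers rounded up to a multiple of regions)."""
    minimum = math.ceil(hdds / drives_per_server)
    # Round up so every region gets the same number of servers.
    balanced = math.ceil(minimum / regions) * regions
    return minimum, balanced


print("Vendor A:", server_count(768, 60, 3))
print("Vendor B:", server_count(768, 90, 3))
```

For Vendor A the minimum of 13 servers rounds up to 15, while Vendor B's 9 servers already divide evenly across three regions.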

CPU

For a backup use case, a ratio of 1 CPU core to 3 HDDs is recommended:

- A: 60 / 3 = 20 cores / 2 sockets = 10+ core CPUs (assuming dual-socket)

- B: 90 / 3 = 30 cores / 2 sockets = 15+ core CPUs (assuming dual-socket)
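The core-count rule above can be expressed as a short sketch. The function name `cores_per_socket` is hypothetical; the 1:3 core-to-HDD ratio and the dual-socket assumption come from the text.

```python
import math


def cores_per_socket(drives, hdds_per_core=3, sockets=2):
    """Return (total cores, minimum cores per CPU socket)."""
    total = math.ceil(drives / hdds_per_core)
    return total, math.ceil(total / sockets)


print("Vendor A:", cores_per_socket(60))
print("Vendor B:", cores_per_socket(90))
```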

Memory

The number of drives in each chassis will determine the amount of memory that we need.

- Vendor A: 256GB

- Vendor B: 384GB

These values are based on the following memory rules:

- 30 drives or fewer: 128GB

- 31 to 60 drives: 256GB

- More than 60 drives: 384GB
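The memory rules can be written as a simple lookup. This sketch assumes that a chassis with exactly 30 drives falls in the lowest tier and one with exactly 60 drives falls in the middle tier, which is consistent with the 256GB recommended for Vendor A's 60-drive server; the function name `memory_gb` is hypothetical.

```python
def memory_gb(drive_count):
    """Return the suggested memory (GB) for a chassis with the given drive count."""
    if drive_count <= 30:
        return 128
    if drive_count <= 60:   # assumption: exactly 60 drives stays in the middle tier
        return 256
    return 384


print("Vendor A:", memory_gb(60), "GB")
print("Vendor B:", memory_gb(90), "GB")
```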

HDDs

Include 2 boot drives

SSDs (Account/Container policy)

Using the calculation of 3.9K of SSD per unique object in the cluster, we would need approximately 2.34TB of SSDs (3PB / 5MB avg object size * 3.9K = 2.34TB). Dividing this amount by the number of nodes for each vendor gives the minimum SSD size for each node.

- Vendor A: 2.34TB / 15 = 156GB+ per node

- Vendor B: 2.34TB / 9 = 260GB+ per node
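The SSD sizing can be checked with integer arithmetic. This sketch assumes decimal units (1 PB = 10^15 bytes, 1 MB = 10^6 bytes) and reads "3.9K" as 3,900 bytes per object; both are assumptions, and the function name `ssd_sizing` is hypothetical.

```python
PB = 10**15
MB = 10**6
GB = 10**9


def ssd_sizing(usable_bytes, object_bytes, ssd_bytes_per_object, nodes):
    """Return (object count, total SSD bytes, SSD bytes per node)."""
    objects = usable_bytes // object_bytes
    total = objects * ssd_bytes_per_object
    per_node = -(-total // nodes)   # ceiling division
    return objects, total, per_node


for vendor, nodes in (("A", 15), ("B", 9)):
    objects, total, per_node = ssd_sizing(3 * PB, 5 * MB, 3900, nodes)
    print(f"Vendor {vendor}: {objects} objects, "
          f"{total / 10**12:.2f} TB total, {per_node // GB} GB per node")
```

The result is 600 million objects and 2.34 TB of SSD in total, which works out to 156 GB per node for Vendor A and 260 GB per node for Vendor B.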

Network

Include 2 x 10GbE network cards.

Note: From the proxy calculations above, there will be approximately 120 Gb/sec of "cluster-facing" network traffic. Divided by 9-15 object nodes, that is roughly 8-13 Gb/sec per object node when all are online.
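The per-node traffic estimate in the note can be sketched as below. The function name `per_node_gbps` is hypothetical, and the even split across nodes is a simplifying assumption that holds only when all nodes are online.

```python
def per_node_gbps(cluster_gbps, nodes):
    """Return cluster-facing traffic per node, assuming an even split."""
    return cluster_gbps / nodes


for nodes in (15, 9):
    print(f"{nodes} nodes: {per_node_gbps(120, nodes):.1f} Gb/sec per node")
```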

Regions, Zones, & Data Center Racks

The customer request was for three regions and three replicas, so the math above preferred multiples of three for even distribution. With Vendor A, for example, we will have 15 PACO nodes, or 5 PACO nodes per region. Five 4U PACO nodes will easily fit within a common 42U data center rack along with top-of-rack switches for data traffic and hardware management, so each region could be configured to contain only a single zone.
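The rack-fit check is simple arithmetic, sketched here for completeness. The function name `rack_units` is hypothetical, and the space needed for switches is not given in the text, so the sketch only reports what is left over.

```python
def rack_units(nodes, node_u, rack_u=42):
    """Return (rack units used by nodes, rack units remaining)."""
    used = nodes * node_u
    return used, rack_u - used


used, free = rack_units(5, 4)
print(f"{used}U used by nodes, {free}U left for switches and other equipment")
```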